ChatGPT eats cannibals

The ChatGPT hype is starting to die down: Google searches for “ChatGPT” are down 40% from their peak in April, and web traffic to OpenAI’s ChatGPT website has fallen almost 10% in the past month.

This is only to be expected. However, GPT-4 users also report that the model seems considerably dumber (but faster) than before.

One theory is that OpenAI has split it into several smaller models that have been trained in specific areas and can work together, but not quite at the same level.

But an even more intriguing possibility may also be playing a role: AI cannibalism.

The web is now awash with AI-generated text and images, and this synthetic data is being scraped up to train AIs, creating a damaging feedback loop. The more AI-generated data a model ingests, the worse its output becomes in terms of coherence and quality. It’s a bit like what happens when you make a photocopy of a photocopy and the image just keeps getting worse.

Although GPT-4’s official training data ends in September 2021, it clearly knows a lot more than that, and OpenAI recently shut down its web browsing plugin.

A new paper from scientists at Rice University and Stanford University has come up with a neat acronym for the problem: Model Autophagy Disorder, or MAD.

“Our primary conclusion across all scenarios is that without enough fresh real data in each generation of an autophagous loop, future generative models are doomed to have their quality (precision) or diversity (recall) progressively decrease,” they said.

Essentially, in an ongoing process, the models start to lose the more unique but less well-represented data and harden their outputs around less varied data. The good news is that this gives the AIs a reason to keep humans in the loop, provided we can work out a way to identify and prioritize human content for the models. That is one of OpenAI boss Sam Altman’s plans for his eyeball-scanning blockchain project, Worldcoin.
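The photocopy-of-a-photocopy effect can be illustrated with a toy simulation. The sketch below is not the method used in the Rice and Stanford paper; it is a minimal stand-in that assumes the “model” is just a Gaussian fitted to its training data, and every name in it (`autophagic_loop`, `fresh_fraction`, and so on) is hypothetical. Each generation is trained on samples drawn from the previous generation’s fit, optionally mixed with some fresh real data.

```python
import random
import statistics


def autophagic_loop(generations, sample_size, fresh_fraction=0.0, seed=0):
    """Toy self-consuming training loop (illustrative assumption, not the paper's setup).

    The "model" is a Gaussian described by (mu, sigma). Each generation it is
    refitted to a dataset made of its own samples plus an optional share of
    fresh samples from the real distribution. Returns sigma per generation.
    """
    rng = random.Random(seed)
    real_mu, real_sigma = 0.0, 1.0      # the "real world" distribution
    mu, sigma = real_mu, real_sigma     # generation 0 matches the real data
    history = [sigma]

    n_fresh = int(sample_size * fresh_fraction)
    n_synthetic = sample_size - n_fresh

    for _ in range(generations):
        # Synthetic data: sampled from the current model's own output.
        synthetic = [rng.gauss(mu, sigma) for _ in range(n_synthetic)]
        # Fresh data: sampled from the real distribution.
        fresh = [rng.gauss(real_mu, real_sigma) for _ in range(n_fresh)]
        data = synthetic + fresh

        # "Train" the next generation by refitting mean and spread.
        mu = statistics.fmean(data)
        sigma = statistics.pstdev(data)
        history.append(sigma)

    return history


if __name__ == "__main__":
    closed = autophagic_loop(generations=500, sample_size=20, fresh_fraction=0.0)
    mixed = autophagic_loop(generations=500, sample_size=20, fresh_fraction=0.5)
    print("final spread, synthetic data only:", closed[-1])
    print("final spread, half fresh data:   ", mixed[-1])
```

In this toy setting the spread of the fitted distribution stands in for diversity: with no fresh data it drifts toward zero over the generations, while a steady share of real samples keeps it anchored. Real generative models are far more complex, so this is only an intuition aid.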

Is Threads just a loss leader for training AI models?

Twitter clone Threads is a somewhat odd move by Mark Zuckerberg, as it cannibalizes users from Instagram. The photo-sharing platform makes up to $50 billion a year, but Meta stands to make only about a tenth of that from Threads, even in the unrealistic scenario of taking 100% of Twitter’s market share. Big Brain Daily’s Alex Valaitis predicts that it will either be shut down or reintegrated into Instagram within 12 months, arguing that the real reason it was launched now “was to have more text-based content to train Meta’s AI models on.”

ChatGPT was trained on huge volumes of data from Twitter, but Elon Musk has taken various unpopular steps to prevent that from happening in the future (charging for API access, rate limiting, and so on).

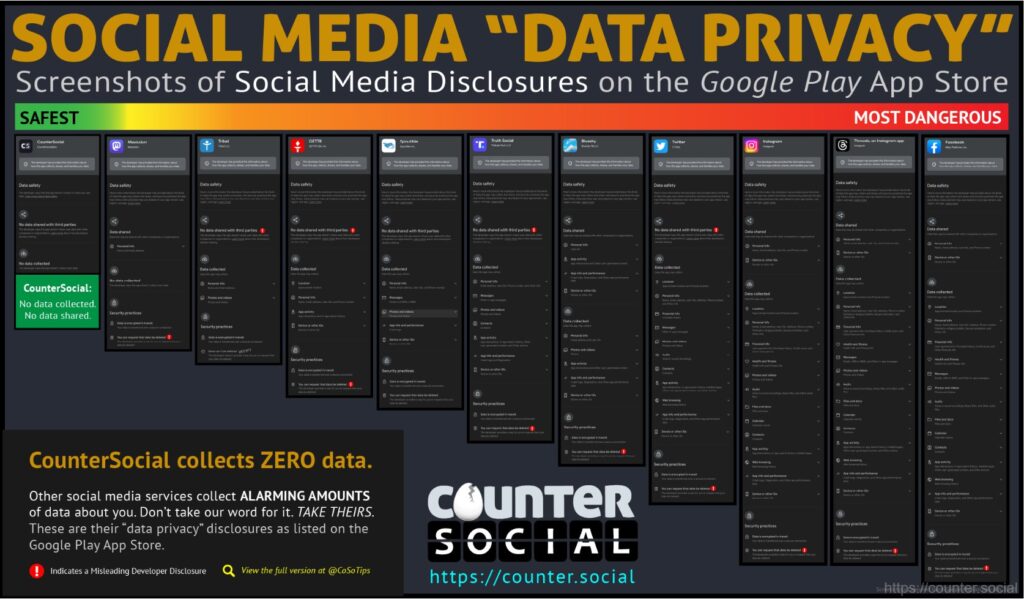

Zuck has form in this regard, having trained Meta’s SEER image recognition AI software on a billion photos posted to Instagram. Users consented to this in the privacy policy, and quite a few have pointed out that the Threads app collects data on everything from health information to religious beliefs and race. This data will inevitably be used to train AI models such as Facebook’s LLaMA (Large Language Model Meta AI).

Musk, meanwhile, has just launched an OpenAI competitor called xAI, which will use Twitter’s data for its own LLM.

Religious chatbots are fundamentalists

Who would have thought that training AIs on religious texts and having them speak in the voice of God would be a terrible idea? In India, Hindu chatbots posing as Krishna keep advising their users that killing people is OK if it is their dharma, or duty.

At least five chatbots trained on the Bhagavad Gita, a 700-verse scripture, have emerged in recent months, but the Indian government has no plans to regulate the technology, despite ethical concerns.

“It’s miscommunication, misinformation based on religious text,” said Mumbai-based lawyer Lubna Yusuf, co-author of The AI Book. “A text gives a lot of philosophical value to what it is trying to say, and what does a bot do? It gives you a literal answer, and that’s the danger here.”

AI doomers versus AI optimists

The world’s foremost AI doomer, decision theorist Eliezer Yudkowsky, has released a TED Talk warning that superintelligent AI will kill us all. He’s not sure how or why, because he believes an AGI will be so much smarter than us that we won’t even understand how and why it’s killing us, like a medieval peasant trying to understand how an air conditioner works. It might kill us as a side effect of pursuing some other objective, or because “it doesn’t want us making other superintelligences to compete with it.”

He points out that “nobody understands how modern AI systems do what they do. They are giant inscrutable matrices of floating point numbers.” He doesn’t expect “marching robot armies with glowing red eyes” but believes that a “smarter and uncaring entity will figure out strategies and technologies that can kill us quickly and reliably and then kill us.” The only thing that could prevent this scenario would be a worldwide moratorium on the technology, backed by the threat of World War III, but he doesn’t think that will happen.

In his essay “Why AI Will Save the World,” A16z’s Marc Andreessen argues that this sort of position is unscientific: “What is the testable hypothesis? What would falsify the hypothesis? How do we know when we are getting into a danger zone? These questions go mainly unanswered apart from ‘You can’t prove it won’t happen!’”

Microsoft co-founder Bill Gates published an essay of his own, titled “The Risks of AI Are Real but Manageable,” in which he argued that, from cars to the internet, “people have managed through other transformative moments and, despite a lot of turbulence, come out better off in the end.”

“It’s the most transformative innovation any of us will see in our lifetimes, and a healthy public debate will depend on everyone being knowledgeable about the technology, its benefits, and its risks. The benefits will be massive, and the best reason to believe that we can manage the risks is that we have done it before.”

Data scientist Jeremy Howard has published his own paper arguing that any attempt to outlaw the technology or confine it to a few large AI models would be a disaster. He likens the fear-based response to AI to the pre-Enlightenment era, when humanity tried to restrict education and power to the elite.

“Then a new idea took hold. What if we trust in the overall good of society at large? What if everyone had access to education? To the vote? To technology? This was the Age of Enlightenment.”

His counter-proposal is to encourage open-source development of AI and to trust that most people will use the technology for good.

“Most people will use these models to create, and to protect. How better to be safe than to have the massive diversity and expertise of human society at large doing their best to identify and respond to threats, with the full power of AI behind them?”

OpenAI’s code interpreter

GPT-4’s new code interpreter is a terrific upgrade that allows the AI to generate code on demand and actually run it. Anything you can dream up, it can generate the code for and run. Users have come up with various use cases, including uploading company reports and getting the AI to create useful charts of the key data, converting files from one format to another, creating video effects, and turning still images into video. One user uploaded an Excel file of every lighthouse location in the US and got GPT-4 to create an animated map of the locations.
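To give a sense of the file-conversion use case, here is a minimal sketch of the sort of script such a tool might plausibly write and run when asked to convert a CSV file to JSON. It is an illustration only, not actual output from GPT-4; the function name `csv_to_json` and the sample rows are made-up placeholders.

```python
import csv
import io
import json


def csv_to_json(csv_text: str) -> str:
    """Convert CSV text (with a header row) into a JSON array of objects."""
    reader = csv.DictReader(io.StringIO(csv_text))
    # Each CSV row becomes one JSON object keyed by the header names.
    return json.dumps(list(reader), indent=2)


if __name__ == "__main__":
    # Placeholder data for illustration only, not real lighthouse records.
    sample = "name,state\nExample Light A,ME\nExample Light B,OR\n"
    print(csv_to_json(sample))
```

All values come out as strings because CSV carries no type information; a fuller conversion would need to decide which columns to parse as numbers or dates.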

All killer, no filler AI news

– Research from the University of Montana found that artificial intelligence scored in the top 1% on a standardized test of creativity. The Scholastic Testing Service gave GPT-4’s responses to the test top marks for creativity, fluency (the ability to generate lots of ideas), and originality.

– Comedian Sarah Silverman and authors Christopher Golden and Richard Kadrey are suing OpenAI and Meta for copyright infringement over the training of their respective AI models on the trio’s books.

– Microsoft’s AI Copilot for Windows will eventually be great, but Windows Central found that the Insider preview is really just Bing Chat running through the Edge browser, and it can just about turn on Bluetooth.

– Anthropic’s ChatGPT competitor Claude 2 is now available for free in the UK and US, and its context window can handle 75,000 words of content, compared with ChatGPT’s 3,000-word maximum. That makes it ideal for summarizing long texts, and it’s not bad at writing fiction.

Video of the week

Indian satellite news channel OTV News has unveiled its AI news anchor, Lisa, who will present the news several times a day in a number of languages, including English and Odia, for the network and its digital platforms. “The new AI anchors are digital composites created from footage of a human host, and they read the news using synthesized voices,” said OTV Managing Director Jagi Mangat Panda.

Andrew Fenton

Andrew Fenton is a Melbourne-based journalist and editor specializing in cryptocurrencies and blockchain. He has worked as a national entertainment writer for News Corp Australia, as a film journalist for SA Weekend, and for The Melbourne Weekly.

Follow the author @andrewfenton